record system in pulseaudio (CLI) :: ALSA configuration :: best disable link so far (3/2014)

Linux sound management and the changing nature of Linux initialization schemes are the two bad things about Linux. This post is about sound. PulseAudio, which dates from 2004 and became a common distribution default around 2008, is prevalent as of this writing (2014), and it has made the sound kludge even worse.[1]

OSS - still good

My Linux use began when OSS was the only sound system communicating with hardware. OSS had bugs, but it was straightforward, and therefore a good foundation which should have been developed further instead of dumped. I'm not a recording engineer, but I never encountered either of the two main purported limitations of OSS: 1) an inability to process simultaneous sound sources (e.g., capturing a mic input at the same time as a music stream), or 2) a supposed incapacity to split a single sound source into multiple types of files simultaneously.

When I wanted to listen to several files through the speakers and simultaneously capture the output into a single WAV file, I piped them through sox. For example, I created a script which played several audio files in sequence, and I used sox to collect the output into a single file:

$ somescript.sh | sox -t ossdsp -w -s -r 44100 -c 2 /dev/dsp foobarout.wav

That's all there was to it.

ALSA - meh

When ALSA became common, the simple approach of /dev/dsp was gone. In ALSA, we had to locate soundcard information with aplay -l, aplay -L, /proc/asound/pcm AND /dev/snd/. Some software couldn't handle the ALSA layer, and we'd have to name the device, which was no longer simply /dev/dsp. So we'd have to look up its card and device numbers and provide literal device information such as "hw:0,0". Another problem was hours lost configuring daemons. As users, we'd more or less have to choose between OSS and ALSA and make certain we'd blacklisted the modules of the other. In other words, it's unclear what benefit ALSA provided.

Consider the play/capture scenario I described above. Under ALSA, a similar effect should have been available after researching ALSA commands. That's already wasted time (duplication of effort) to achieve the same outcome, but it also turns out the ALSA commands were less reliable. For example...

$ somescript.sh | arecord -D hw:0,0 -f cd foobarout.wav

...often resulted in a string of error messages about "playback only", even though capture had been enabled in ALSA. To me, it seemed that ALSA, and not OSS, had the multiplexing limitations.
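The hw:0,0 in that command means card 0, device 0, and those numbers come from the `arecord -l` (capture) or `aplay -l` (playback) listing. A minimal sketch for turning that listing into ready-to-use device strings, assuming the usual `card N: ID [name], device M: description` line layout; `alsa_hw_names` is a hypothetical helper name, not part of alsa-utils:

```shell
# alsa_hw_names: hypothetical helper, not part of alsa-utils.
# Reads `aplay -l` or `arecord -l` output on stdin and prints one
# "hw:CARD,DEVICE  description" line per hardware device.
alsa_hw_names() {
  # Device lines look like:
  #   card 0: SB [HDA ATI SB], device 0: ALC268 Analog [ALC268 Analog]
  # Header and "Subdevice" lines do not match and are skipped (-n).
  sed -n 's/^card \([0-9][0-9]*\): [^,]*, device \([0-9][0-9]*\): \(.*\)$/hw:\1,\2  \3/p'
}
```

Usage: `arecord -l | alsa_hw_names` lists capture devices; `aplay -l | alsa_hw_names` lists playback devices.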

Further, the C code of ALSA's "dmix" plugin, which one would think was created exactly to solve this problem, gives no respite...

ALSA lib pcm_dmix.c:961:(snd_pcm_dmix_open) The dmix plugin supports only playback stream

Lol. In the end, the only helpful plugin (with, of course, nearly zero documentation and an unintuitive name) is the asym plugin. To use it, you first have to become aware of it, which is not easy, and you still won't escape building and tweaking /etc/asound.conf and/or ~/.asoundrc. Have fun.
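For reference, a commonly used asym setup pairs the dmix plugin (playback only) with the dsnoop plugin (capture) behind a single default device. A minimal sketch, assuming one soundcard and ALSA's predefined `dmix` and `dsnoop` PCMs; the output path `./asoundrc.example` is hypothetical, chosen so that no existing config is overwritten:

```shell
# Write an example ALSA config that splits the default PCM into a
# playback half (dmix) and a capture half (dsnoop) via the asym plugin.
# The output path is a hypothetical example; review the file before
# copying it to ~/.asoundrc or /etc/asound.conf.
out=./asoundrc.example
cat > "$out" <<'EOF'
pcm.!default {
    type asym
    playback.pcm "plug:dmix"
    capture.pcm "plug:dsnoop"
}
EOF
echo "wrote $out"
```

With that file in place as ~/.asoundrc, capturing from `default` should go through dsnoop instead of tripping over the dmix "playback stream" error, assuming the card supports capture at the requested rate.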

PulseAudio

Instead of simply developing either ALSA or OSS more completely, some group developed PulseAudio. PulseAudio purported to make muxing easier than ALSA, which purported to make muxing easier than OSS. "Lol." Lol, because ALSA and/or OSS continue to be layers underneath PulseAudio. So now we add PulseAudio's memory and CPU load to that of the OSS or ALSA modules running beneath it. Stacking three layers that pull in different directions also raises the potential for errors. And if you think PulseAudio makes configuration any easier, take a look here.

After PulseAudio configuration, recording my script requires the same steps as capturing a stream. It's too much information to regurgitate entirely here, but the shorthand is:

$ pavucontrol (set "Record Stream from" to "Monitor of Internal Audio Analog Stereo")

$ somescript.sh | parec --format=s16le --device=(from "pactl list") | oggenc --raw --quiet -o dump.ogg -

There is also, hypothetically, an OSS emulator called "padsp" (install ossp in Arch) with which one could use sox again. That is, PulseAudio apparently relies on an emulator instead of simply accessing a real OSS module. I haven't tried padsp, but it may work:

$ padsp sox -r 44100 -t ossdsp /dev/dsp foobarout.wav
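The --device value in the parec one-liner is the name of a monitor source. A minimal sketch for picking it out of `pactl list short sources`, assuming PulseAudio's usual naming (monitor sources end in `.monitor`) and that the name is the second tab-separated column; `first_monitor` and `record_monitor` are hypothetical helper names:

```shell
# first_monitor: print the first source name ending in ".monitor" from
# `pactl list short sources` output on stdin (column 2 is the name).
first_monitor() {
  awk '$2 ~ /\.monitor$/ { print $2; exit }'
}

# record_monitor: record whatever is playing to an Ogg file.
# $1 = output file (defaults to dump.ogg). Requires pactl, parec, oggenc.
record_monitor() {
  src=$(pactl list short sources | first_monitor)
  if [ -z "$src" ]; then
    echo "no monitor source found" >&2
    return 1
  fi
  # parec defaults to 44100 Hz stereo; oggenc --raw assumes the same
  # rate, channel count and 16-bit little-endian samples by default.
  parec --format=s16le --device="$src" | oggenc --raw --quiet -o "${1:-dump.ogg}" -
}
```

Usage: start `somescript.sh` in one terminal and `record_monitor foobarout.ogg` in another; stopping the recording is left to Ctrl-C, as with the original one-liner.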

PulseAudio crippleware effect

Once PulseAudio has been installed, even inadvertently as a dependency of some other application, and even when you're sure its daemon is not running, your soundcard will likely be reduced to analog mode. For example, after PulseAudio was installed, but with its daemon not running, I observe...

$ aplay -l

**** List of PLAYBACK Hardware Devices ****

card 0: SB [HDA ATI SB], device 0: ALC268 Analog [ALC268 Analog]

Subdevices: 1/1

Subdevice #0: subdevice #0

...when I should instead see...

$ aplay -l

**** List of PLAYBACK Hardware Devices ****

card 0: SB [HDA ATI SB], device 0: ALC268 Analog [ALC268 Analog]

Subdevices: 1/1

Subdevice #0: subdevice #0

card 0: SB [HDA ATI SB], device 1: ALC268 Digital [ALC268 Digital]

Subdevices: 1/1

Subdevice #0: subdevice #0

Results will be similar with $ arecord -l. There's no way to capture one's system properly in ALSA again until it detects the entire soundcard. If we'd like, we can see the problem even more clearly:

$ aplay -L

null

Discard all samples (playback) or generate zero samples (capture)

default

Default ALSA Output (currently PulseAudio Sound Server)

sysdefault:CARD=SB

HDA ATI SB, ALC268 Analog

Default Audio Device

front:CARD=SB,DEV=0

HDA ATI SB, ALC268 Analog

Front speakers

[remaining 4 entries, all analog dev=0, snipped]

That is, even with PulseAudio disabled, ALSA remains infected with the PulseAudio Sound Server and its analog limitations.
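The giveaway in the aplay -L listing is the description under the `default` entry. A minimal sketch for checking it from a script, assuming the aplay -L layout shown (an unindented PCM name followed by indented description lines); `default_is_pulse` is a hypothetical helper that succeeds when ALSA's default PCM still reports the PulseAudio Sound Server:

```shell
# default_is_pulse: read `aplay -L` output on stdin; exit 0 if the
# description line right after the "default" PCM mentions PulseAudio,
# exit 1 otherwise.
default_is_pulse() {
  awk '
    $0 == "default" {
      # The next line is the human-readable description.
      if ((getline line) > 0 && line ~ /PulseAudio/) found = 1
    }
    END { exit !found }
  '
}
```

Usage: `aplay -L | default_is_pulse && echo "default PCM still routed to PulseAudio"`.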

PulseAudio disabling

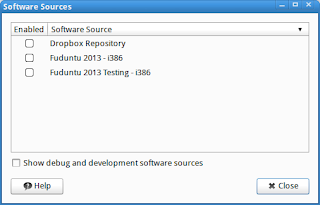

Link: excellent disabling instructions

We'd like to disable PulseAudio, but we'd prefer not to uninstall it; for example, it's understood that some GNOME sound functions with hooks into PulseAudio libraries may not work otherwise. We know from above that it's not enough to simply disable its daemon.

| File | Note |
|---|---|
| /etc/pulse/ | dir: PulseAudio config files |
| /etc/modprobe.d/alsa-base | configuration for ALSA modules |
| /usr/share/alsa/ | dir: ALSA config files |
| /etc/asound.conf | ALSA config file for PulseAudio |
| /usr/share/alsa/default.pa | change ALSA hooks |
| /etc/pulse/default.pa | change PulseAudio hooks |

1. $ pulseaudio -k

2. # pacman -R pulseaudio-alsa

3. # cd /usr/share/alsa/alsa.conf.d && rename .conf .bak *.conf
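Step 3 is easier to undo if the renaming is scripted in both directions. A minimal sketch, assuming POSIX sh and that every file to disable ends in `.conf`; `alsa_conf_disable` and `alsa_conf_restore` are hypothetical helper names, and the directory is a parameter so the functions can be tried on a scratch copy first:

```shell
# alsa_conf_disable [DIR]: rename every DIR/*.conf to DIR/*.bak.
# DIR defaults to the directory used in step 3.
alsa_conf_disable() {
  dir=${1:-/usr/share/alsa/alsa.conf.d}
  for f in "$dir"/*.conf; do
    [ -e "$f" ] || continue          # glob matched nothing
    mv -- "$f" "${f%.conf}.bak"      # replace only the ".conf" suffix
  done
}

# alsa_conf_restore [DIR]: rename every DIR/*.bak back to DIR/*.conf.
alsa_conf_restore() {
  dir=${1:-/usr/share/alsa/alsa.conf.d}
  for f in "$dir"/*.bak; do
    [ -e "$f" ] || continue
    mv -- "$f" "${f%.bak}.conf"
  done
}
```

Run both as root against the real directory. Note that the restore function also renames any .bak file that was already there before the disable step.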

...to be continued.

[1] Some configurations even include a Music Player Daemon ("MPD") and/or Simple DirectMedia Layer ("SDL"), ridiculous 4th and 5th possible layers.