I have 2.5 other posts on this backup, so nothing here is in depth: just the steps I can recall and a few tips, with a recap of the challenges at the top.

The Challenges

It's likely not a mistake that there's a laborious manual process, instead of a simple software solution, for the common need of backing up media. I smell entertainment attorneys.

- every backed-up CD becomes a folder of MP3's. To recreate playing a CD, a person would have to sit at their computer and click each MP3 file in CD sequence, or else make the entire CD into a single large file.

- M3U files play MP3 files in sequence, eg in the same sequence as the CD. A large catch (also probably DRM-related) is that, in this setup, the M3U's only worked with hard links, meaning complete, system-specific absolute paths to the media files (not filesystem hard links). The M3U format itself accepts relative paths, resolved against the playlist's own folder, but they did not work here, so a portable, relative-link solution was out. Further, typing these long paths into M3U's by hand is tedious, and it increases the chance of fatigue and entry errors.

- Most browsers (probably due to industry pressure) won't open M3U files. They only download the M3U instead of playing its laboriously entered MRL links.
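Typing the paths can be scripted. The following is a minimal sketch with a hypothetical function name, make_m3u. It assumes one folder per CD that holds only that CD's MP3's, and file names that start with the track number. It writes absolute paths, which matches the hard links described above. Tracks are ordered with sort -V, a version sort from GNU coreutils, so track 2 comes before track 10 even without zero-padding.

```shell
#!/bin/sh
# Write <folder>/<folder>.m3u listing every MP3 in that CD folder,
# using absolute paths, ordered by the track number at the start of each name.
make_m3u() {
    # Resolve the CD folder to an absolute path (fails if it doesn't exist).
    dir=$(cd "${1:?CD folder required}" && pwd) || return 1
    out="$dir/$(basename "$dir").m3u"
    {
        printf '#EXTM3U\n'
        # Only this folder, only .mp3 files; sort -V puts 2 before 10.
        find "$dir" -maxdepth 1 -type f -name '*.mp3' | sort -V
    } > "$out"
    # Report where the playlist went.
    printf '%s\n' "$out"
}
```

For example, make_m3u /media/music/Some-Album (a hypothetical folder) writes /media/music/Some-Album/Some-Album.m3u. You can then open that file in the editor to add comments.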

NB Time: if a person has the real estate, the industry has made it easier to simply leave media on the shelf and pull it off when a person wants to listen. Backing up a 100-CD collection takes about 75 hrs (100 CDs × 45 mins per CD for backing up and post-processing = 4500 mins), ie about 2 work weeks. It's worth it, of course, if there's any attachment to the collection.

NB Hard Links: an HTML interface will provide access similar to the original physical disks, with a 'forever and in a small space' fillip. However, the first job is to find a browser that will open links to M3U's. This is probably a moving litigation target, but currently Falkon opens them, albeit with an additional confirmation step in each instance.

NB M3U's: these carry a lot of information in addition to links. Over years of additional listens, a person can flesh out comments on every track, as much as they want, without affecting playback or having them displayed. They are a private mini-blog where the listener can add info, times and additional versions, or make new M3U mixes, etc. Protect at all costs.
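The sketch below shows what an annotated M3U can look like, written with a shell heredoc. The album, paths, durations and notes are all made up. In an extended M3U (first line #EXTM3U), lines starting with #EXT are directives. For example, #EXTINF:seconds,title supplies a display title. Any other line starting with # is a comment, and common players such as VLC and mpv skip it. That is what makes the mini-blog possible.

```shell
#!/bin/sh
# Write a hypothetical annotated playlist. The quoted 'EOF' stops the shell
# from expanding anything inside the heredoc.
cat > Some-Album.m3u <<'EOF'
#EXTM3U
# Bought 1998, used. Disc has a light scratch near track 2.
#EXTINF:245,Some Artist - First Track
/media/music/Some-Album/01.First_Track.mp3
# 2023 relisten: the slower version in the mixes folder works better at night.
#EXTINF:198,Some Artist - Second Track
/media/music/Some-Album/02.Second_Track.mp3
EOF

# The lines without a leading '#' are what the player actually loads.
grep -v '^#' Some-Album.m3u
```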

configure (possibly 1 work day)

- partition(s) for the external backup disk, probably using XFS these days (2023), and a micro USB.

- Touchscreen and a 3.5mm connector (speakers) + USB-C (backed-up external)

- fstab is a PITA. It has to be modified to mount the drive the media is on, so that the hard links in M3U's work. However, a modified fstab will drop the boot into maintenance mode if I boot/reboot the system without that drive connected (I usually specify /dev/sdd for the USB drive), at least when the entry lacks the "nofail" mount option.

So at boot, return fstab to default. After boot, remodify fstab with the dev and run "mount -a". Anyway, that's how f'ed up these WIPO organizations have made having a media drive.
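For the fstab problem above, the usual fix is the nofail mount option. It lets the boot carry on when the drive is missing. The following is a sketch that only prints such an entry. It assumes an XFS partition labelled music and a mount point of /media/music, both hypothetical. Mounting by LABEL= also avoids depending on the drive always showing up as /dev/sdd.

```shell
#!/bin/sh
# Hypothetical values: change the label and mount point to match your drive.
# A label can be set with: xfs_admin -L music /dev/sdX1  (filesystem unmounted)
MEDIA_LABEL=music
MEDIA_MNT=/media/music

# Print one fstab line for an XFS media drive.
#   LABEL=...    stays valid whether the drive appears as sdc, sdd, ...
#   nofail       boot continues if the drive is absent
#   x-systemd.device-timeout=10s  don't wait the default 90 s for it
#   0 0          no dump, no boot-time fsck (fsck.xfs does nothing anyway)
fstab_line() {
    printf 'LABEL=%s %s xfs defaults,nofail,x-systemd.device-timeout=10s 0 0\n' "$1" "$2"
}

fstab_line "$MEDIA_LABEL" "$MEDIA_MNT"
```

After checking the printed line, you could append it with fstab_line music /media/music | sudo tee -a /etc/fstab. Then run sudo systemctl daemon-reload, followed by sudo mount -a. With the entry in place, the fstab should be able to stay modified across boots.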

- consider the file structure: music, images, M3U's, booklet art, video versions, slower versions (for mixes, etc).

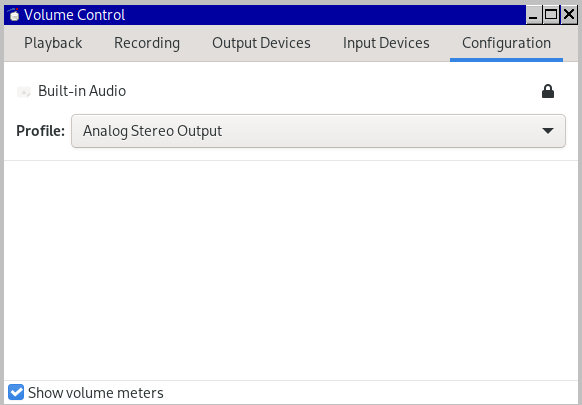

- configure XDG for xdg-open to open M3U's with preferred player

- review/establish ~/.config/abcde.conf and verify an operational CDDB. The CDDB saves at *least* 5 mins per disk. Without it, all track names, artists, etc must be entered by hand.
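For the XDG step, xdg-mime (from xdg-utils) sets the default application for a MIME type, and xdg-open follows that setting. The following is a minimal sketch with a hypothetical function name, set_m3u_player. It assumes the player ships a desktop file such as vlc.desktop, and that M3U's are detected as audio/x-mpegurl. Running xdg-mime query filetype on one of your M3U's shows what your system actually reports.

```shell
#!/bin/sh
# Make xdg-open hand M3U playlists to one chosen player.
set_m3u_player() {
    desktop=${1:?desktop file required, eg vlc.desktop}
    # audio/x-mpegurl is the usual type for .m3u; audio/mpegurl is an alias
    # some systems use, so set both.
    for mime in audio/x-mpegurl audio/mpegurl; do
        xdg-mime default "$desktop" "$mime" || return 1
    done
}

# Usage (hypothetical player and path):
#   set_m3u_player vlc.desktop
#   xdg-mime query filetype /media/music/Some-Album/Some-Album.m3u
#   xdg-mime query default audio/x-mpegurl    # should print vlc.desktop
```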

backing up (30mins per CD)

- abcde the shit to 320 kbps

$ abcde -d /dev/sr0 -o mp3:"-b 320" -c ~/.config/abcde.conf
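The file passed with -c above is plain shell that abcde reads, so it is just variable assignments. The following is a sketch of one, not a complete config. The variable names are abcde's own, but the values (the output folder especially) are assumptions to adapt. freedb.org, the old default CDDB server, closed in 2020, so CDDBURL may need to point at a replacement such as gnudb.

```shell
#!/bin/sh
# ~/.config/abcde.conf (sketch). abcde sources this file, so plain shell
# assignments work. All values here are assumptions to adapt.
CDDBMETHOD=cddb            # look up track names via CDDB (musicbrainz is another option)
# CDDBURL: set to a working CDDB server if lookups fail
OUTPUTTYPE=mp3
LAMEOPTS='-b 320'          # same bitrate as -o mp3:"-b 320" on the command line
OUTPUTDIR="$HOME/music"    # hypothetical; wherever the backup drive is mounted
# Single quotes on purpose: abcde fills these placeholders in per track.
OUTPUTFORMAT='${ARTISTFILE}-${ALBUMFILE}/${TRACKNUM}.${TRACKFILE}'
```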

- while abcde runs, scan (xsane) the cover art and booklet at 200 or 300 dpi, as square as possible

- create a PDF of the booklet/insert (convert the JPGs), and save the front cover for easytag and the HTML thumbnails

- << above 3 should be done simultaneously, aiming for 15 mins per disk >>

- easytag the files and clean their names, attach cover jpg/png.

- create m3u's for each disk (geany, gedit, whatever)

- << above 2 roughly 15 mins per disk >>
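The booklet PDF step ("convert jpgs") can be a single ImageMagick call. The following is a sketch with a hypothetical function name, booklet_pdf. It assumes the scanned pages are JPGs in one folder with zero-padded names such as page01.jpg, page02.jpg (also hypothetical), so the shell's sorted glob keeps reading order. Some distributions ship an ImageMagick policy that refuses to write PDFs. In that case, img2pdf page*.jpg -o booklet.pdf does the same job and embeds the JPGs without recompressing them.

```shell
#!/bin/sh
# Combine scanned booklet pages into one PDF with ImageMagick.
#   $1: folder of scanned pages   $2: output PDF (default: <folder>/booklet.pdf)
booklet_pdf() {
    dir=${1:?scan folder required}
    out=${2:-"$dir/booklet.pdf"}
    # The glob expands in sorted order, so zero-padded page numbers matter.
    convert "$dir"/*.jpg "$out"
}
```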

post-processing (15 mins per CD)

- download a browser that will not block M3U's, eg Falkon.

- start building your HTML page

- enter each file's relevant info into the schema

- create 100x100 thumbnails for faster webpage loading

$ mogrify -format png -path /home/foo/thumbs -thumbnail 100x100 *.jpg
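As a starting point for the HTML page, here is a sketch that writes one clickable thumbnail per CD, linking straight to its M3U with a file:// URL. That is the kind of link Falkon has to be persuaded to open. It assumes the following hypothetical layout:

- one folder per CD under an absolute music folder, each holding a .m3u with the same name as the folder;
- PNG thumbnails named after the CD folder. The mogrify command above names them after the source JPGs, so rename them to match.

Paths containing spaces or characters such as & and < would need escaping, which this sketch skips. Short names avoid the issue.

```shell
#!/bin/sh
# Write a bare-bones index.html: one thumbnail per CD, linked to its M3U.
#   $1: absolute music folder   $2: absolute thumbnail folder
#   $3: output file (default: <music>/index.html)
make_index() {
    music=${1:?music folder required}
    thumbs=${2:?thumbnail folder required}
    out=${3:-"$music/index.html"}
    {
        printf '<!DOCTYPE html>\n<html><head><meta charset="utf-8"><title>CDs</title></head>\n<body>\n'
        for m3u in "$music"/*/*.m3u; do
            [ -e "$m3u" ] || continue      # glob matched nothing
            name=$(basename "$m3u" .m3u)
            printf '<a href="file://%s"><img src="file://%s/%s.png" alt="%s"></a>\n' \
                "$m3u" "$thumbs" "$name" "$name"
        done
        printf '</body></html>\n'
    } > "$out"
}
```

Each CD's track list, notes, etc can then be filled in by hand around these links.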

tips

- keep MP3 file names short, since they have to be hand-entered into the M3U. Longer names can go in the ID3 tag and/or the M3U file. Both the ID3 info and, especially, the M3U file can take additional information later, at one's leisure.

- typos waste a lot of time and break links. Copy and paste whenever possible for accuracy.

- keep the HTML interface file open in the same text editor as the M3U's (Geany, for example). The HTML page gets continual modifications and touch-ups, even as I open and close various M3U's, plus a lot of copying and pasting from the M3U's into the HTML file.

- Geany has a search and replace function. When creating the M3U for a CD, a person can copy an existing M3U into the folder of the CD they are working on and use it as a template. Just rename it for the current CD, then use search and replace to update all the links inside the M3U with a single click. A person can then start editing the song names without having to enter all the hard link information again. Saves time.

- every so often, run the scanner with nothing on the glass and look at the result to see if the glass needs cleaning

- make an M3U template, or else waste 5 mins every time deleting the prior entries from the one copied over. Every CD will need an M3U to play all of its songs.

- This is good software cuz it has melt included for combining MP4's
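The Geany search-and-replace tip can also be done with sed: copy an existing M3U for the new CD and swap the folder part of every path. The following is a sketch with a hypothetical function name, m3u_from_template. The folder paths go straight into the sed expression, so it assumes they contain no |, & or regex brackets. The song names still come from the old CD and need editing, just as in the Geany workflow.

```shell
#!/bin/sh
# Create <newfolder>/<newfolder>.m3u from an existing M3U by replacing
# the old CD's folder with the new one in every path.
#   $1: existing M3U (the template)   $2: folder of the new CD
m3u_from_template() {
    old=${1:?template M3U required}
    newdir=$(cd "${2:?new CD folder required}" && pwd) || return 1
    olddir=$(cd "$(dirname "$old")" && pwd) || return 1
    new="$newdir/$(basename "$newdir").m3u"
    # '|' as the sed delimiter so the slashes in paths need no escaping.
    sed "s|$olddir/|$newdir/|g" "$old" > "$new"
    printf '%s\n' "$new"
}
```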