Both of these must be entered for the monitor to remain on during inactivity. Neither command by itself is enough to keep the monitor alive.

$ xset -dpms

$ xset s noblank

With these two entered, the screen will still be receiving a signal, but it's just for the backlight, not for any display conent. If we want the display content to remain during inactivity, we must do the two above AND add the following.

$ xset s off

Do we even need this project? Re the LAPP: "no, but helpful". Re the email: probably "yes, for understanding dashboard alerts". LAPP or any other monolithic CMS (XAMP, LAMP) that require learning PHP might be a waste if we can chain cloud services and so on (eg. read comments under this video).

Since LAPP elements are servers, they typically require user switching, individual configuration, or other permission issues. A separate post will deal with production configuration like that. I wrote this one aiming for a localhost light development environment (besides Docker). Additionally, I've attempted to view each LAPP element independently, in case we learn of an app that requires only one element, eg the PHP server, or just a relational database. I also subbed out the Apache "A" for another "L", lighthttp: LLPP. More commonly though, even browser based apps (eg Nextcloud - go to about 8:30) still use a CMS LAMP, LAPP, LNPP, etc. Electron, Go, Docker, won't be covered here.

LAPP

Linux (L)

For both LAPP and email, verifying /etc/hosts is properly configured for IPv4 and 6 for localhost smoothness. Otherwise we typically already login as some user, so we shouldn't have permission issues if everything else is well configured.

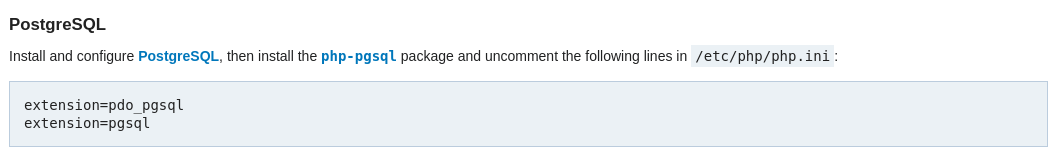

PostgreSQL (P)

# pacman -S postgreqsl

# pacman -Rsn postgresql

Lunatic designers really really really don't want a person running this on their own system under their own username. There's no security advantage to this, just poor design. This is almost more difficult than the design of the database itself.

After installing with pacman, add one's username to the postgres group in /etc/group, then initialize the database. Use...

$ initdb -D /home/foo/postgres/data

...instead of the default:

$ initdb -D /var/lib/postgres/data

Attempt startup.

$ postgres -D '/home/foo/postgres/data'

If starting-up gives the following error...

FATAL: could not create lock file "/run/postgresql/.s.PGSQL.5432.lock": No such file or directory

...run the obvious

# mkdir /run/postgresql

# chown foo:foo /run/postgresql

Just do the above, don't believe anything about editing service files or the postgresql.conf file in /data. None of it works. Don't even try to run it as a daemon or service. Just start it like this:

$ postgres -D '/home/foo/postgres/data'

Now that the server is up and running, you can open another terminal and run psql commands. If you run as user postgres (see below), you'll have admin authority.

$ psql postgres

If a lot of data has been written, a good exit phrase to make sure all is safely written:

$ psql -c CHECKPOINT && pg_ctl stop -m fast

PHP (P)

Link: CLI flags ::

# pacman -S php php-pgsql

# pacman -Rsn php php-pgsql

PHP server setup (8:04) Dani Crossing, 2015. Old, but not outdated.

php.ini basics (7:25) Program With Gio, 2021.

If we've got a dynamic website, we typically need PHP (server) and then some JavScript(browser/client) to help display it. The less JavaScript the better (slows browser). I consulted the Arch PHP Wiki .

standalone PHP - start/stop

Standalone PHP is easier to troubleshoot than when starting Apache simultaneously. Stopping is CTRL-C in the terminal where it was started. Startup with web-typical TCP port 80, however leads to a permission issue.

$ php -S localhost:80

[Fri Oct 23 11:20:04 2020] Failed to listen on localhost:80 (reason: Permission denied)

Port 80 works when used with an HTTP server (Apache, NGINX, etc), but not standalone PHP (not sure why). So, use any other port, eg port 8000, and it works.

$ php -S localhost:8000

[Fri Oct 23 11:20:04 2020] PHP 8.0.12 Development Server (http://127.0.0.1:8000) started

See this to determine when best to use index.html, or index.php. But there are at least 3 ways for PHP to locate the index.html or index.php file:

- before starting the server, CD to the folder where the servable files are located

- specify the servable directory in the startup command

$ php -S 127.0.0.1:8000 -t /home/foo/HTML

$ php -S localhost:8000 -t ~/HTML

- edit /etc/php/php.ini to indicate file locations...

# nano /etc/php/php.ini

doc_root = "/home/foo/HTML"

... however this strategy can lead to mental illness and/or lost weekends: sometimes the ini file will not be parsed. Good luck.

First, take a breath. then verify which ini file is being used.

$ php -i |grep php\.ini

Configuration File (php.ini) Path => /etc/php

Loaded Configuration File => /etc/php/php.ini

If you have modified the correct

ini file, hours and hours of finding the correct syntax for

standalone PHP - configuration and files

Links: PHP webserver documentation :: Arch PHP configuration subsection

PHP helpfully allows us to configure a document root, so I can keep all html files (including index.htm) inside my home directory. The open_basedir variable inside the configuration file (/etc/php/php.ini) is like a PATH command for PHP to find files. Also, when pacman installs PHP dependent programs like phpwebadmin or nextcloud it default links them to /etc/webapps, because this is a default place PHP tries to find them. Even though they are installed into /usr/share/webapps. So if I had a folder named "HTML" inside my home directory, I'd want to at least:

# nano /etc/php/php.ini

open_basedir = /srv/http/:/var/www/:/home/foo/HTML/:/tmp/

email local

Link: simple setup :: another setup :: notmuch MTA setup

$ mkdir .mail

We also need a mail server on the machine but which only sends to localhost and then only to one folder in /home/foo/.mail/ inside localhost. However, instead of setting up local email alerts, why not skip all of that (for now), and run a log analysis program like nagios?

We want to configure the lightest localhost system setup for email that we can read on a browser. Once we can reliably do alerts on, eg. systemd timers, or some consolidating log app (eg,Fluentd), or some Python/Bash script triggers, other things are possible. Our simplest model takes timer script outputs and sends email to the local system (out via SMTP port 25).

CLI suite

Linux has some default mail settings: mail to root is kept at /var/mail/root, user typically in ~/mail. It appears we'll need a webserver, no small email systems have this built-in. Appears Alot can be our MUA. It is available on the repositories, and can be configured with other light localhost services as described here.

cli configuration

browser MUA

Once the above configured, we can use a browser MUA. For Chromium, the Chrome store, has an extension for notmuch, called Open Email Client. configuration information is provided.